Containerization has become a major trend in software development, with tools like Docker and Kubernetes helping teams better manage application performance and infrastructure reliability. Valorem Solutions Consultant Muhammed Rizwan explains why this approach is gaining such popularity among developers and how it can help businesses reduce costs.

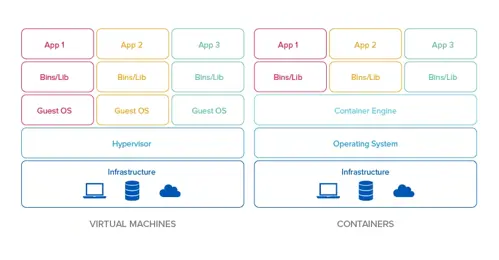

Software deployment used to be a tough job. Applications were deployed to physical bare-metal servers, and often only a portion of the memory or CPU was needed, leaving resources unused and cost savings unrealized. Then virtualization arrived, and a single bare-metal server could host multiple virtual machines, enabling significant cost savings and a more efficient, scalable IT environment.

Today, the next big revolution in software development is making deployment even faster and more convenient. Using containerization technology, developers can further split virtual servers and run containerized applications under one operating system, improving cost-effectiveness and enabling microservices.

Why Containerization?

We have all seen the containers in shipyards that store goods and carry them from one place to another without harm or damage. The container acts as a shield, protecting the items inside for extended periods until they reach their destination, and a single ship or carrier can transport many containers holding goods of all types. What if we could package our applications, with all their configuration files, libraries, and dependencies, in a compact container for easier and safer storage and deployment? In the world of DevOps, such containerization is possible using tools like Docker and Kubernetes. These ecosystems allow each container to run as a process in isolation from other containers.

The benefit of this approach is that you pay for only one physical host, install one operating system, and allocate only as much memory and CPU as each container needs, which reduces waste. In addition, a container takes only seconds to spin up, giving your applications speed and near-instant scalability. Lastly, unlike virtual machines, containers let you add or remove resources on the go, without rebooting the machine for changes to take effect.

Beyond speed and efficient memory and CPU utilization, one of the biggest advantages of containerization is that it suits a microservice architecture, where a large application is broken down into small components, each running in its own container.
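As a rough illustration of sizing memory and CPU per container, here is a minimal sketch of a Kubernetes Pod manifest with resource requests and limits. The pod name, image path, and the specific numbers are assumptions chosen for the example, not values from a real project.

```yaml
# Minimal sketch: one container with explicit CPU and memory sizing.
# "web-app" and the image path are hypothetical placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: web-app
spec:
  containers:
    - name: web-app
      image: myregistry.azurecr.io/web-app:1.0.0   # hypothetical image
      resources:
        requests:            # what the scheduler reserves for this container
          cpu: "250m"        # a quarter of one CPU core
          memory: "128Mi"
        limits:              # upper bound the container may use
          cpu: "500m"
          memory: "256Mi"
```

Requests and limits are set per container, so several small containers can share one host without each reserving a whole machine's worth of resources.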

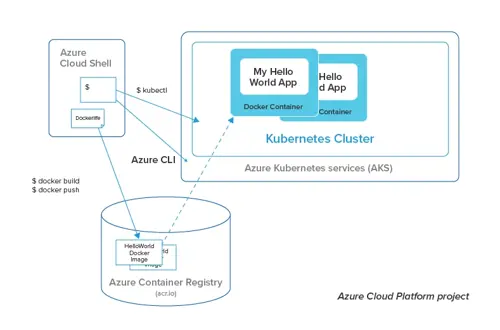

Kubernetes and Docker

Docker is the most popular tool on the market for containerizing applications for software deployment. It allows you to run multiple containers for different applications on the same Docker host. However, what if the number of users increases or decreases and you need to quickly scale your application up or down? To enable this, we need a platform that automatically deploys and manages the containers, known as a container orchestrator. Kubernetes is the most popular container orchestration technology. Container orchestration has various advantages, and one of the biggest is the availability of your application. Since multiple instances of your application are running on different nodes, a hardware failure on one node does not put your application at risk.
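One way Kubernetes can scale an application up and down with load is a HorizontalPodAutoscaler. The following is a minimal sketch, assuming a Deployment named `web-app` already exists and that the cluster collects CPU metrics. The name, replica bounds, and 70% target are illustrative assumptions.

```yaml
# Minimal sketch: scale a hypothetical "web-app" Deployment on CPU usage.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-app
spec:
  scaleTargetRef:            # the workload to scale (assumed to exist)
    apiVersion: apps/v1
    kind: Deployment
    name: web-app
  minReplicas: 2             # keep at least two instances for availability
  maxReplicas: 10            # upper bound chosen for illustration
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # add pods when average CPU exceeds ~70%
```

CPU utilization here is measured relative to each container's CPU request, so this sketch only behaves sensibly if the pods declare requests.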

All of these containerization and orchestration steps can be driven by a set of declarative configuration files:

Docker’s build command produces container images that can be stored in a registry, such as Docker Hub or Azure Container Registry. The sole purpose of a container registry is to store the container images that Kubernetes uses for deployment.

Kubernetes runs on nodes, which may be physical or virtual machines depending on where Kubernetes is installed. A node is a worker machine, and it is where Kubernetes launches containers. What if the node your application is running on fails? Running our containers on multiple nodes keeps the application available and helps balance the load. These grouped nodes form a Kubernetes cluster.

Containerization: Making Infrastructure as Code Easier

In the past, software development, testing, deployment, and monitoring were done in sequence, one after another. However, with developers interested in delivering changes quickly and operations teams interested in ensuring reliability and stability before release, it became clear how important collaboration between the two teams is throughout this process. Thus DevOps was born. DevOps, in conjunction with containerization technologies like Docker and Kubernetes, makes it easy for developers to perform IT operations and to build and deploy software at the quality levels expected by operations. This is where Infrastructure as Code (IaC) comes in: the infrastructure is provisioned and managed through configuration files, written here in a language called YAML. Infrastructure written as code has tremendous benefits. One of them is the ability to track changes in a source control system like Git.

YAML is a text format for representing configuration data. Kubernetes resources are created in a declarative way using YAML files. These files are stored in the repository and built through Azure DevOps to containerize our application. It is very easy to scale, roll out, and roll back versions of your application using a YAML file.
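To make the declarative style concrete, here is a minimal sketch of a Kubernetes Deployment manifest. The application name, label, image path, and port are hypothetical placeholders; only the overall structure is the point.

```yaml
# Minimal sketch of a Deployment: "run three copies of this image".
# All names and the image path are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 3                  # desired number of pods
  selector:
    matchLabels:
      app: web-app             # must match the pod template labels below
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: myregistry.azurecr.io/web-app:1.0.0   # pulled from a registry
          ports:
            - containerPort: 8080
```

A file like this is typically applied with `kubectl apply -f deployment.yaml` (the file name is an assumption). Because it lives in the repository, every change to the desired state shows up in source control.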

Benefits of using a Kubernetes deployment YAML file:

- Zero downtime: Updates to a pod (the smallest deployable object in Kubernetes, where containers run) do not cause downtime.

- Rolling update and rollback: A Kubernetes deployment supports rolling updates and rollbacks, allowing you to update the existing application version without causing an application outage. Since Kubernetes keeps a revision history for each deployment, it is easy to roll back an update.

- Scaling: It is easy to scale the number of pods using a deployment. We specify the number of pods that should be alive at any one time, and Kubernetes maintains it.
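The three benefits above correspond to a handful of fields in a Deployment manifest. The following is a sketch under assumptions: the names, image path, health check path `/healthz`, port, and numeric values are all hypothetical and would differ per application.

```yaml
# Sketch: Deployment settings behind zero downtime, rollback, and scaling.
# All names, paths, and numbers are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 3                    # scaling: pods to keep alive at any one time
  revisionHistoryLimit: 5        # rollback: how many old revisions to retain
  strategy:
    type: RollingUpdate          # replace pods gradually instead of all at once
    rollingUpdate:
      maxUnavailable: 0          # never drop below the desired replica count
      maxSurge: 1                # start one extra pod at a time during an update
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: myregistry.azurecr.io/web-app:1.1.0   # changing this triggers a rollout
          readinessProbe:        # traffic is sent only once the new pod reports ready
            httpGet:
              path: /healthz     # assumed health endpoint
              port: 8080
```

With a manifest like this in place, `kubectl rollout undo deployment/web-app` returns to the previous revision, and `kubectl scale deployment/web-app --replicas=5` changes the pod count. Whether an update is truly free of downtime still depends on the application starting cleanly and answering its readiness check.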

The Future

Containerization technology is rich in features and growing rapidly. In the DevOps world, Kubernetes and Docker are gaining popularity among developers because they are constantly changing and adapting to new requirements and challenges. As microservice architecture becomes more dominant, containerization tools such as Docker and Kubernetes will greatly help teams manage their containers and infrastructure. In turn, the reduced “time to market” speeds up innovation. Containerization allows much faster delivery of new functionality, so it fits perfectly into the continuous development and delivery process.