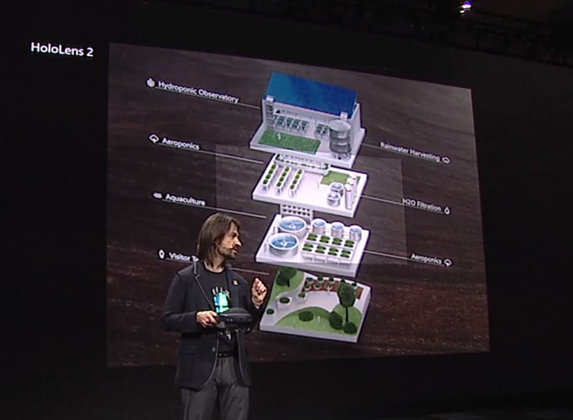

On Sunday, February 24, 2019, Satya Nadella, Alex Kipman and Julia White took the stage at Mobile World Congress (MWC) in Barcelona to show the world HoloLens 2, Azure Kinect and many other new services and products. Valorem Reply's Director of Global Innovation and long-time MR/AR/VR + AI development expert, René Schulte, followed the announcements closely! In this post he shares an overview of the announcements and what they mean for today’s business leaders.

HoloLens 2

Valorem Reply has been part of the Mixed Reality journey since day one. As one of the first Microsoft Mixed Reality Partners, we have been developing HoloLens and Mixed Reality applications for enterprise clients across a broad range of verticals since 2015.

The introduction of HoloLens 2 made one thing clear: HoloLens is an enterprise device, ready for production use at scale. This is further underscored by the fact that Microsoft no longer offers a separate development edition; there is only one SKU of HoloLens 2, because it is a production-ready device. While companies like thyssenkrupp have already seen the immense business value of Mixed Reality in large-scale field services, the next generation of HoloLens promises to drive business impact even further. Research firm Gartner predicts that “By 2022, 70% of enterprises will be experimenting with immersive technologies for consumer and enterprise use, and 25% will have deployed to production.” Our friends at BDO also think AR/MR technology is ready and that businesses with no plans to adopt it now could get left behind.

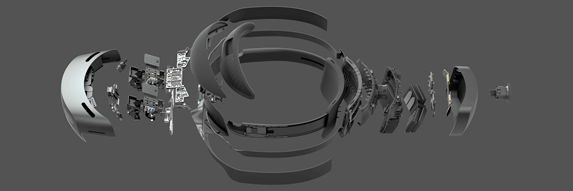

The HoloLens 2 hardware is a great advancement and will further accelerate adoption at scale in many areas of the modern workplace. The new release makes it clear that Microsoft listened to its customers and addressed all the major downsides they reported with HoloLens 1. HoloLens and Kinect visionary Alex Kipman confirmed that the increased field of view more than doubles the area of visible holograms compared to HoloLens 1, while still maintaining the high pixel density and clarity of the waveguide displays.

Microsoft also improved the ergonomics of HoloLens, making it much easier to put on and more comfortable to wear for extended periods. Another big improvement is a new visor that flips up, so HoloLens 2 users can easily step out of their Mixed Reality experiences when needed. And HoloLens 2 can still be worn over prescription glasses (unlike some other Augmented/Mixed Reality competitors).

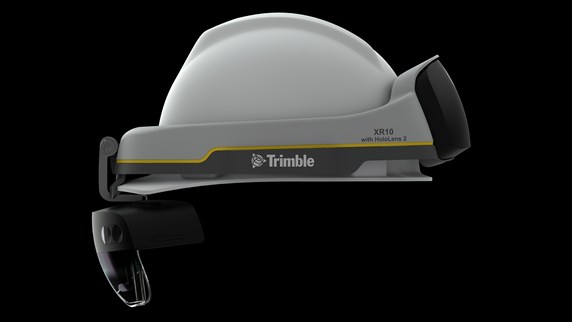

Microsoft went a step further and is offering the HoloLens Customization Program to help customers adapt the device to their environments and specific needs. The first company to take advantage of the program is Trimble, which already offered a hard hat accessory for HoloLens 1 and will now provide a full-fledged smart hard hat that empowers workers in safety-controlled environments.

At Valorem Reply, we can see such customized hardware bringing big benefits to our mechanical engineering customers as well.

One of the most exciting new features of HoloLens 2 is its improved hand tracking. With HoloLens 1, users had very limited gesture options, such as the air tap, and could not directly interact with holograms. HoloLens 2 offers fully articulated hand tracking with 25 tracked points per hand, which means users can directly touch and interact with holograms. HoloLens engineer Julia Schwarz showed off this exciting advancement at the press event by playing a virtual piano with her hands!

This is an amazing milestone in computing history and will empower Immersive Experience creators like us at Valorem Reply to create even more outstanding User Experiences (UX).
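To make "directly touching holograms" a bit more concrete, here is a minimal Python sketch of how an app might detect key presses on a virtual piano like the one in the demo. It assumes the hand-tracking runtime delivers fingertip positions in meters every frame; the `PianoKey` class, the `update_keys` function and the box-shaped key volumes are hypothetical illustrations, not the HoloLens or Mixed Reality Toolkit API.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical representation: 3D positions in meters in a shared world frame.
Vec3 = Tuple[float, float, float]


@dataclass
class PianoKey:
    """A virtual key modeled as an axis-aligned box (a simplifying assumption)."""
    name: str
    center: Vec3
    half_extents: Vec3  # half of the key's width, height and depth
    pressed: bool = False

    def contains(self, point: Vec3) -> bool:
        # Inside the box if the point is within the half-extent on every axis.
        return all(abs(point[i] - self.center[i]) <= self.half_extents[i] for i in range(3))


def update_keys(keys: Sequence[PianoKey], fingertips: Sequence[Vec3]) -> List[str]:
    """Update key state for one frame and return the names of keys that were just pressed.

    `fingertips` stands in for the fingertip joints taken from the tracked hand
    joints. A note plays only on the released -> pressed transition, so holding
    a finger on a key does not retrigger it every frame.
    """
    just_pressed = []
    for key in keys:
        touching = any(key.contains(tip) for tip in fingertips)
        if touching and not key.pressed:
            just_pressed.append(key.name)
        key.pressed = touching
    return just_pressed


# Example: two neighboring keys and an index fingertip moving across three frames.
keys = [
    PianoKey("C4", center=(0.000, 0.0, 0.5), half_extents=(0.01, 0.01, 0.05)),
    PianoKey("D4", center=(0.025, 0.0, 0.5), half_extents=(0.01, 0.01, 0.05)),
]
print(update_keys(keys, [(0.0, 0.0, 0.5)]))       # ['C4']
print(update_keys(keys, [(0.0, 0.0, 0.5)]))       # [] (still held, no retrigger)
print(update_keys(keys, [(0.025, 0.005, 0.52)]))  # ['D4']
```

Tracking the pressed state per key is what turns raw per-frame joint positions into discrete "note on" events; a real app would likely add some hysteresis so jittery tracking near a key's edge does not cause repeated presses.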

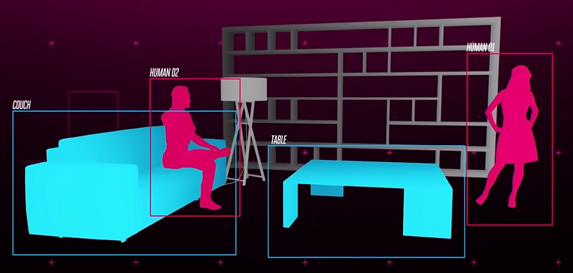

HoloLens 2 also takes real-world mapping to the next level. HoloLens 1 reconstructed a simple spatial mesh that provided a coarse approximation of the real-world environment; think of it like throwing a blanket over physical objects. HoloLens 2 adds semantic understanding, recognizing the type of physical objects, not just their shape.

The improved hand tracking and spatial mapping with semantic understanding are powered by the new Azure Kinect depth sensor (more below) and even more so by the new Holographic Processing Unit (HPU). The HPU v2 is a powerful AI accelerator chip which can run fast Machine Learning model evaluation on the device itself.

AI Deep Learning will dramatically change how Mixed Reality apps perceive their environment. Adding intelligence directly on the device makes experiences truly immersive and creates an intelligent edge scenario: low-latency, hardware-accelerated inference on the edge. With the HPU v2, HoloLens 2 is perfectly equipped for all of this.

Valorem Reply is actively working in the field of the intelligent edge and intelligent cloud, and in 2018 we achieved early results using fast AI inference for custom semantic understanding on HoloLens 1. The video below shows a demo app that uses a Deep Learning model trained to recognize certain objects, giving the app semantic understanding of its surroundings. The model was exported as an ONNX file and embedded into a HoloLens app that uses Windows Machine Learning (WinML) for near real-time object recognition. Additionally, the HoloLens spatial mesh is used to measure the distance to recognized objects, and this information is given to the user via text-to-speech.
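The post doesn't say exactly how the demo turned the spatial mesh into a distance. One common approach, sketched below in Python under that assumption, is to cast a ray from the user's head toward the recognized object (for example, through the center of the bounding box the ONNX model returns) and take the nearest intersection with the mesh triangles using the Möller-Trumbore ray/triangle test. The mesh is modeled as a plain list of triangles, unprojecting the 2D box center into a 3D ray is left out, and the helper names (`distance_to_mesh`, `describe`) are hypothetical, not part of WinML or any HoloLens API.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3, tri: Triangle, eps: float = 1e-9) -> Optional[float]:
    """Möller-Trumbore ray/triangle test: distance t along the ray to the hit, or None."""
    v0, v1, v2 = tri
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:                 # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det           # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det   # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv_det
    return t if t > eps else None      # ignore hits behind the ray origin


def distance_to_mesh(origin: Vec3, direction: Vec3, mesh: Sequence[Triangle]) -> Optional[float]:
    """Nearest hit distance along the ray (in mesh units, e.g. meters), or None on a miss."""
    length = math.sqrt(_dot(direction, direction))
    unit = (direction[0] / length, direction[1] / length, direction[2] / length)
    hits: List[float] = []
    for tri in mesh:
        t = ray_triangle(origin, unit, tri)
        if t is not None:
            hits.append(t)
    return min(hits) if hits else None


def describe(label: str, distance: Optional[float]) -> str:
    """Sentence that could be handed to a text-to-speech engine."""
    if distance is None:
        return f"I can see a {label}, but not how far away it is."
    return f"The {label} is about {distance:.1f} meters away."


# Example: a 2 m x 2 m wall patch (two triangles) 2 m in front of the user's head.
wall = [((-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (1.0, 1.0, 2.0)),
        ((-1.0, -1.0, 2.0), (1.0, 1.0, 2.0), (-1.0, 1.0, 2.0))]
print(describe("door", distance_to_mesh((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), wall)))
```

Normalizing the ray direction makes `t` a distance in the mesh's units, which is what the spoken output needs. A real spatial mesh has many thousands of triangles, so a production app would query an engine's built-in raycast or a spatial index rather than loop over every triangle.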

Together with the intelligent cloud, HoloLens 2 will enable amazing use cases that provide business value in many aspects of today’s modern workplace. We can now use the best of both worlds for the two phases of a Machine Learning model: train the model in the cloud, taking advantage of its computing power and scale for the resource-intensive training phase, and then run Deep Learning inference (evaluation) on an edge device for the lowest latency and real-time results.

Unlike HoloLens 1, which used an Intel Atom x86 CPU, HoloLens 2 features a Qualcomm Snapdragon 850 with an ARM64 architecture. The full, high-level tech specs are available here.

The device is also fully enterprise-ready, supporting a suite of device management tools and integrations with Microsoft products. For example, the new eye-tracking camera can be used not only for input interactions and sentiment analysis (!) but also for secure Windows Hello sign-in.

HoloLens 2 can be preordered now and will be available later this year, either as a device for $3,500 USD or as a subscription bundled with Dynamics 365 Remote Assist for $125 per user, per month.

HoloLens 2 certainly won’t be the last HoloLens. In this insightful video, tech magazine CNET asked Alex Kipman about the challenges of creating HoloLens 2 and his vision for HoloLens 3.

Azure Kinect DK

Microsoft had a few more exciting announcements at its MWC press event, including a new Kinect sensor called Azure Kinect DK (development kit).

Valorem Reply has worked with both the original Kinect and the Kinect v2 for many years to create compelling and unique Natural User Interfaces (NUI). Recently we have been using the Kinect v2 for our immersive telepresence technology, HoloBeam, which allows teams to have 3D volumetric video conversations using a depth camera like the Kinect and a HoloLens (or other viewer devices). Basically, you see the other person as a 3D hologram in real time instead of as a flat 2D video, for more engaging and effective collaboration. HoloBeam is a perfect example of how extended reality is changing the way the world does business. It was even featured in Satya Nadella’s keynote at Inspire and in the Microsoft 365 keynote at Ignite to demonstrate the 'future of work' in an era of MR, AI and Data.

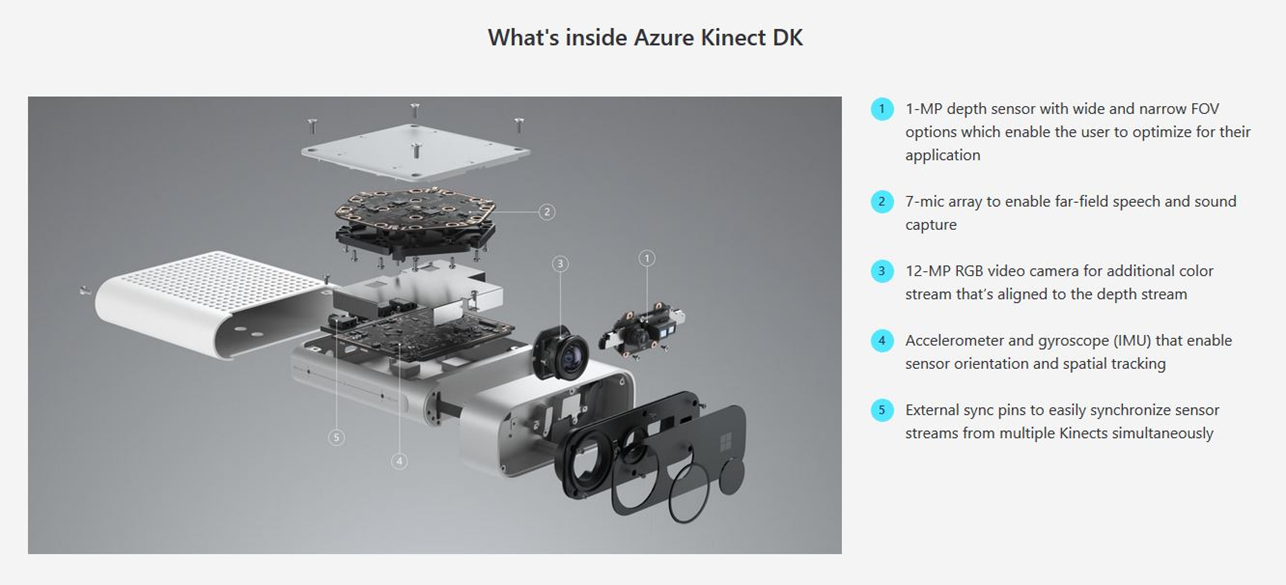

The Azure Kinect DK contains the same time-of-flight depth sensor that is in the new HoloLens 2. It can run in several operating modes, at various resolutions and frame rates, with either a narrow or a wide field of view. The quality of the depth point cloud it provides is unprecedented, and without a doubt this is the best-in-class depth sensor available on the market.

In addition to the depth sensor, the Azure Kinect DK includes a 4K RGB color camera, a 7-microphone array, and a built-in IMU with gyroscope and accelerometer. Also worth noting are the sync pins, which connect multiple sensors for a synchronized signal, e.g. for 360° captures.

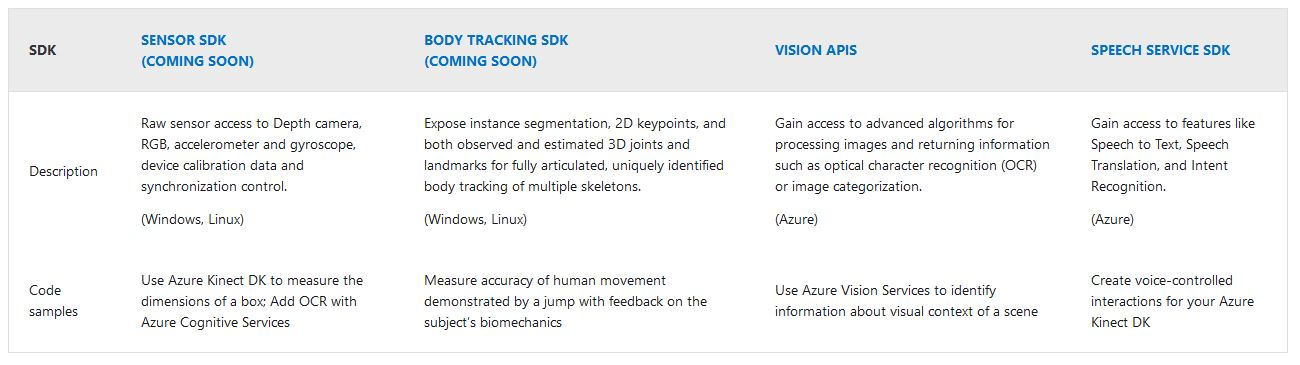

The Azure Kinect DK can be used together with the Microsoft Azure Vision and Speech services to further leverage the power of the intelligent cloud. The device can also be used without the cloud: connected via USB-C to a Windows or Linux PC, it works with the upcoming Sensor and Body Tracking SDKs.

The Azure Kinect DK can be preordered now in the USA and China for $399 USD, and shipping will start June 27th, 2019. More information, such as specs and preliminary documentation, can be found here.

We at Valorem Reply are excited about the amazing opportunities the new Azure Kinect DK sensor provides for our industrial clients and beyond. HoloBeam will also benefit greatly from the combination of HoloLens 2 and the Azure Kinect DK, with increased resolution and quality.

Azure Mixed Reality Services

Microsoft also announced two new Azure Mixed Reality services.

Azure Spatial Anchors realizes what some in the industry call the AR Cloud: large-scale, multi-user, cross-platform shared experiences with persisted virtual objects. Azure Spatial Anchors works not only with HoloLens but also with mobile AR platforms like iOS ARKit and Android ARCore. Scenarios include wayfinding and shared experiences across different devices, but also large-scale, persisted mappings beyond the direct sensor view of the devices. Imagine the real world mapped in the AR Cloud for large-scale AR experiences beyond just your own device; think Ghost in the Shell and other sci-fi movies!

Azure Remote Rendering allows you to render high-quality 3D content beyond the rendering capabilities of an untethered device. The service uses the immense power of Azure GPU machines to render 3D models with hundreds of millions of polygons and streams the rendered frames to the device in real time. Scenarios include large CAD models with intricate details and high-fidelity renderings with compute-intensive visual effects.

Valorem Reply also has a strong cloud computing and Azure focus, and we are excited about the possibilities these new services provide for enhanced user experiences!

Dynamics 365 Guides app

The new Dynamics 365 Guides adds another first-party app to the Microsoft Mixed Reality portfolio, shortening time-to-value for HoloLens customers. Guides empowers workers with immersive training through step-by-step instructions that can be authored in a PC application similar to PowerPoint.

Valorem Reply is not just part of the Mixed Reality Partner Program but also a strong Dynamics partner, so we can assist our customers in all stages of their digital transformation journey. We also help with various aspects of Guides, such as 3D asset creation and optimization, Guides training authoring, and much more.

Open Principles

Microsoft’s Alex Kipman also made three key commitments during MWC:

- “We believe in an open app store model. Developers will have the freedom to create their own stores.”

- “We believe in an open web browsing model.”

- “We believe in an open API surface area and driver model.”

It was also announced that Mozilla will bring its experimental Firefox Reality browser to HoloLens 2. This is exciting, as it will provide WebXR experiences and enable many web developers to target HoloLens for basic use cases.

Alex was also joined on stage by Tim Sweeney, CEO of Epic Games, the creator of the famous game Fortnite and the Unreal 3D engine. Tim announced that Unreal Engine 4 will support Mixed Reality and HoloLens starting in May 2019. This was another exciting moment, as Unity and Unreal are the most important 3D engines on the market, each with its own strengths. The Immersive team at Valorem Reply can’t wait to leverage another best-in-class 3D engine for our projects!

Conclusion

Without doubt, HoloLens 2 combines the most innovative high tech in a modular masterpiece, setting the bar for the industry. Together with the power of modern AI edge computing, HoloLens 2 provides innovative NUI interactions that are a game changer, once again. No competitor on the market offers comparable state-of-the-art hardware together with enterprise-ready services and offerings. Microsoft also shared an impressive set of further announcements at MWC, each of which could easily have filled its own keynote, and we are enthusiastic about all of them.

Valorem Reply is a trusted partner across the Microsoft technology stack and has been developing for HoloLens since day one. We can’t wait to work with our clients on amazing projects using new and improved releases like HoloLens 2, Azure Kinect, the Azure MR services and Dynamics 365 to accelerate their digital transformation journeys and empower people and the modern workplace with the intelligent edge and the intelligent cloud.

No matter where you are on the extended reality curve, Valorem Reply can help you reach your goals faster. Our Immersive Envisioning Workshops are custom-tailored to your needs and are an excellent way to explore opportunities to improve and grow your business with leading-edge mixed reality technology. Click HERE or reach out to marketing@valorem.com for more information.