Microsoft recently held its second Ignite conference of the year and brought us some exciting announcements. In this post, I’m going to focus on my favorite part: the emerging technology news Microsoft shared at Ignite Fall 2021. I will start with the Metaverse and unpack this buzzword we keep hearing everywhere in the tech world right now. I will also cover Mesh for Microsoft Teams, the Azure OpenAI Service including a new GitHub Copilot demo video, and more.

My colleagues also took the chance to record their impressions from Ignite November 2021 and shared their highlights, including Mesh for Teams of course, but also Loop, Viva and many other digital workplace announcements. You can watch and read about them here.

Video created by Rene using the Ignite Create Zone Pop squared selfie tool.

What is the Metaverse?

First of all, what is the Metaverse? Well, at the moment it’s everything and nothing: the term is used for various things like large online gaming worlds, digital twins, Augmented Reality (AR) and Virtual Reality (VR) experiences and more. In a sense, the term is like a qubit, being in multiple states at the same time.

Wikipedia defines it as follows: “The metaverse (a portmanteau of ‘meta-’ and ‘universe’) is a hypothesized iteration of the internet, supporting persistent online 3-D virtual environments through conventional personal computing, as well as virtual and augmented reality headsets.”

The term Metaverse isn’t even new. In fact, it’s almost 30 years old and comes from the sci-fi novel Snow Crash, which Neal Stephenson wrote in 1992. The Metaverse in Snow Crash is the VR successor of the internet, where humans are represented as avatars that interact with each other. The arguably even more popular sci-fi novel Ready Player One from 2011 also features a form of the Metaverse, where humans escape a dystopian reality by putting on VR headsets and entering the virtual world OASIS.

Beyond these cultural references, and even before these books were written, there were early experiments with large online worlds where people could gather. For example, as early as 1985, Lucasfilm Games was working on one of the first online Metaverse-like communities with the video game Habitat. In 2017, I was lucky enough to meet Habitat co-designer Noah Falstein and had some insightful conversations with him. Lucasfilm Games, later known as LucasArts, was ahead of its time and a bit too early: in 1985, computers were not widely deployed, network capabilities were limited, and effort and cost were much higher than today. So Habitat didn’t really take off, but it opened the door for future virtual worlds and the reincarnation of the Metaverse.

Figure 1: Noah Falstein and Rene Schulte talking about the Metaverse in 2017.

Many small and large technology companies (re)discovered their interest in the Metaverse in 2021 and started working on various parts of it. Lots of virtual worlds or closed Metaverse spaces already exist, like Fortnite, Roblox and Minecraft, as well as social VR apps like AltspaceVR.

Microsoft, all the way up to its top leadership, also sees an enterprise-ready Metaverse as a top priority and an enabler for more collaborative, immersive experiences in the business world, beyond purely virtual gaming worlds. Microsoft CEO Satya Nadella put it nicely in an HBR interview:

"I think that this entire idea of metaverse is fundamentally this: increasingly, as we embed computing in the real world, you can even embed the real world in computing."

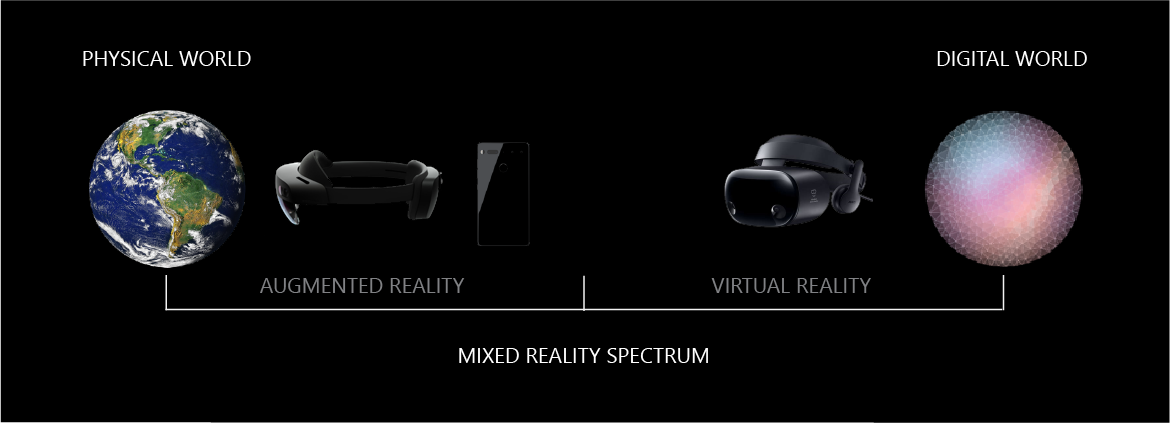

This is a great definition that describes exactly what it’s all about, made possible by Spatial Computing devices that can sense the world around us and digitize it. The physical world can merge with virtual worlds anywhere along the Mixed Reality (MR) spectrum: at one end is a purely immersive virtual world in VR, and at the other end the real world is dominant and only augmented with virtual objects.

Figure 2: Microsoft's Mixed Reality spectrum (Source: https://docs.microsoft.com/en-us/windows/mixed-reality/discover/mixed-reality#the-mixed-reality-spectrum)

The Metaverse can be all of that, and it doesn’t matter whether the user is on an AR or VR device or a classic 2D device like a PC, console, mobile phone, tablet or any other device that lets them take part in a 3D world. This cross-platform aspect, together with open protocols that allow information to be exchanged between Metaverse spaces regardless of the vendor, is the key enabler for wide adoption.

In essence, the Metaverse is a network of computers connecting the world’s publicly accessible virtual experiences, 3D content and other related media. Some call it the new internet, the Immersive Internet, where users represented as avatars meet in 3D spaces and places and share virtual objects to communicate across a wide range of use cases. Today’s websites become interactive 3D spaces in the Metaverse. As Tony Parisi, one of the veterans of Spatial Computing, put it nicely in The Seven Rules of the Metaverse: “A defining characteristic of the Metaverse is spatially organized, predominantly real-time 3D content.”

The Metaverse will surely be one of the big trends of 2022, with companies focusing on new experiences. Some have even changed their names: Facebook renamed its company to Meta, partly to reflect its broad goals in this space, such as the large online world Horizon Worlds, which can be accessed via Meta’s own VR headset Oculus Quest and even with PC devices. One of the motivations is better customer engagement and eCommerce enabled by these more immersive Metaverse experiences, with some even coining the term iCommerce for this new form of customer and brand engagement. Metaverse experiences commercialized with advertising will also benefit from the more detailed user analytics data generated by users’ interactions in these spaces and by their immersive digital representations.

Figure 3: Rene's avatar at the virtual Burning Man festival 2020, hosted in the Metaverse space AltspaceVR.

The user’s own digital identity, represented as an avatar, is a very important element and must become something universal that users can take with them from one Metaverse space to another, similar to a profile picture today. This, of course, is a big challenge: first and foremost because current initiatives are mainly centered around proprietary, locked-in Metaverse solutions, but privacy, identity theft and security concerns should not be dismissed either. One solution could be decentralized identity systems, where the user’s virtual presence is not stored by a single vendor but by decentralized technologies like blockchain. In fact, the hype around NFTs could also help find practical applications in the Metaverse for transactions of virtual objects and even for digital identity and assets. Virtual presence can be the Digital Twin of the Person, visually representing them as a Digital Human, but it can also be fueled with data from wearables and similar devices. In general, the Digital Twin of the Person (DToP) has considerable potential even outside the Metaverse, which we covered in an earlier post.

Today’s commonly used comic-like avatars are an intermediate step until AI computer vision can create more photorealistic avatars based on a person’s actual appearance. Epic Games and NVIDIA, for example, are working on innovative projects for photorealistic Digital Humans. Ultimately, 3D volumetric video and human holograms with solutions like HoloBeam will provide another option once they become practically usable.

Enterprise Metaverse

Whereas companies like Meta or Epic Games build locked-in, mainly closed ecosystems of Metaverse spaces for consumer entertainment scenarios they know very well, like gaming and online social experiences, companies like NVIDIA are expanding the idea toward professional 3D work, seeing the potential of their Omniverse platform for scenarios like Digital Twins, simulation, collaborative 3D content creation and other professional use cases. Open connectors and interfaces allow other vendors to plug into NVIDIA’s Omniverse to provide extra services and connectivity to their proprietary systems.

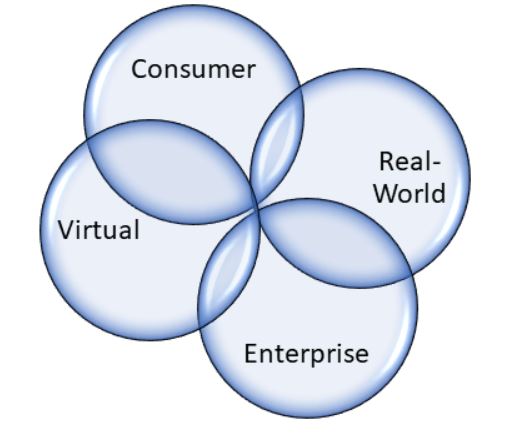

Microsoft follows a similar approach: it wants to provide open connectors into its offerings and focuses on its strengths in the professional, workplace and enterprise sector, including additional services powered by the Azure cloud. It might therefore make sense to differentiate by usage scenario between a Consumer Metaverse and a Workplace or Enterprise Metaverse. Another differentiator can be the user experience: users can either dive into a fully virtual world in a Virtual or Immersive Metaverse, suited for 2D devices and social VR, or use a Real-World Metaverse suited for AR, which leverages Spatial Computing and the AR Cloud with cloud-based spatial anchors, an area where Microsoft’s Mixed Reality team is one of the leaders. In general, as Microsoft CEO Satya Nadella said, it is more about connecting the physical world with the digital world for experiences shared between both.

Other companies like Niantic, known for Pokémon Go and other mobile AR games, are also working on a Real-World Metaverse implementation with their Lightship Platform, which they are now opening to external developers.

An open and interconnected Metaverse, where identities, objects and other assets can be shared between Metaverse spaces, is a key ingredient, and being able to link and carry these assets with you across Metaverse rooms is central to it. Microsoft Technical Fellow and MR visionary Alex Kipman summarized it well in a recent interview: “There’s a world out there that thinks there will be a metaverse, and everyone will live in ‘my’ metaverse. That, to me, is a dystopian view of the future,” says Kipman. “I subscribe to a multiverse. Every website today is a metaverse tomorrow. What makes the internet interesting—opposed to [old platforms like] AOL and CompuServe—is linking between websites.”

Microsoft also released a video describing their vision and implementation of the Metaverse:

Alex Kipman and his teams have been working on immersive workspace collaboration tools like Microsoft Mesh for a while now. Mesh enables the vision that everyone in a meeting can be present without being physically present, using virtual presence as avatars, and later 3D volumetric video (Holoportation), together in immersive spaces that can be accessed from multiple devices without the need for special equipment. At its core, Mesh is a platform on which developers can build their own custom applications, interconnected through Mesh in this enterprise Metaverse powered by Microsoft’s Azure cloud. Enterprise Metaverse spaces, or Corporate Metaverse rooms, will vary in size and scope but offer a consistent user experience, with Mesh providing the foundational glue that holds this multiverse of worlds together. Jesse Havens and I recorded a demo of the Microsoft Mesh app preview running on HoloLens 2 earlier this year, and we both felt much more connected thanks to the immersive presence avatars, which map eye tracking to virtual eye contact. More details about Mesh and the full demo video can be found in an earlier post.

Figure 4: Microsoft HoloLens 2 Mesh app preview in action for Enterprise Metaverse collaboration across 9 time zones.

Mesh for Teams

At Ignite Fall 2021, Microsoft unveiled Mesh for Microsoft Teams, which it envisions as the broad entry point to the Enterprise Metaverse, where companies can set up their own Corporate Metaverse spaces. Mesh for Teams combines the MR capabilities of Mesh with the productivity tools of Microsoft Teams, where people can chat, join virtual meetings, collaborate on shared documents and more. Satya Nadella stated that moving from video to 2D avatars and then to 3D immersive meetings is probably a practical way to think about how the Metaverse will really emerge. Nadella also mentioned that Teams already uses some computer vision to embed the real world in computing: “when you go to a Team’s room, it will even segment everybody in a conference room into their own square, put them back in a meeting as if they were joining remotely. That way then the remote participant can find people who are even sitting in a conference room, identify them, get their profile, and what have you. So that’s a great example of what is physical, has become digitized. And you have people still meeting in a physical space and people who are remote but it’s all bridged.”

Mesh for Teams brings MR and immersive Metaverse spaces, so far mostly used by front-line workers, into the workloads of knowledge workers through Microsoft Teams, which is already widely deployed at enterprise companies. Alex Kipman sees Teams as a gateway to the Metaverse that can show an audience of 250 million users a new approach to remote and hybrid work. Mesh for Teams allows knowledge workers to create and use self-expressive avatars and experience immersive spaces.

Mesh for Teams will work on PCs, smartphones and MR headsets and will allow people to gather, communicate, collaborate and share with a personal virtual presence on any device. Mesh for Teams will begin rolling out in the first half of 2022, with a set of pre-built spaces for a variety of contexts like meetings and social gatherings. Later, corporations will be able to create their own custom immersive Metaverse spaces with Mesh and deploy them to Teams. Users will also have a variety of options for unique, personalized avatars and will be able to join any Teams meeting either represented as an avatar or on video. If a user joins with audio only, an AI model turns the audio signal into believable animations with proper mouth shapes for the avatar and drives accompanying gestures and expressions. If the user has a webcam, a gesture-tracking AI animates the avatar with greater accuracy.

Mesh for Teams, as an entry point to the Enterprise Metaverse, is also an implementation of Ambient Virtual Meetings (AVM), which we predicted in our Top 10 Tech Trends 2021 here, allowing for more immersive virtual meetings. Corporate Metaverse spaces with Mesh are not just a great way to increase engagement and hold better, more immersive virtual meetings: Microsoft’s own human-factors lab also found that people become digitally exhausted from appearing on video. Being represented by a Digital Human avatar rather than just a static photo helps reduce that stress, while 70% of the other people in the meeting still feel that the person is present.

Metaverse Types

As mentioned above, the Metaverse comes in various implementations with different use cases and target audiences, so it might make sense to group them in a Venn diagram that shows where they overlap. Consumers will tend to use fully virtual Metaverse worlds like the ones Meta is building, whereas enterprises will get business value out of real-world Metaverse implementations like those Microsoft and NVIDIA are working on.

Figure 5: Various Metaverse types grouped by scenarios

Azure OpenAI Service

My second most exciting announcement at Ignite was the introduction of the Azure OpenAI Service, which brings the power of OpenAI’s GPT-3 to more developers. GPT-3 is a very large transformer language model capable of some mind-blowing things, like summarizing text or generating content from short snippets. For example, GPT-3 can generate full copy from a very small text input and can complete multiple pages of text with relevant, meaningful content of such quality that it is difficult to tell whether it was written by a human or not.

GPT-3 is a huge model: the full version has 175 billion parameters and can only practically run with the power of the cloud and distributed computing. In 2019, Microsoft invested in OpenAI and provided its cloud capabilities, and in return it later licensed GPT-3. The Azure OpenAI Service now brings the power of that model to many more developers, with the scalability and security of the Azure cloud. The pre-trained GPT-3 model variations can also be adapted to specific use cases, either through few-shot learning, where a handful of examples in the prompt steer the model, or through fine-tuning, where developers provide custom training data via a REST API to achieve more relevant results. The security and privacy aspects of Azure are in fact very important here, as we wrote in our recent AI Security article, even more so with such a powerful model that also has a large potential for misuse. That is also why the Azure OpenAI Service adds filters and content moderation on top of GPT-3 to help ensure that the models are used for their intended purpose. Additionally, Microsoft does not provide public self-service access; developers who want to use the Azure OpenAI Service have to apply for an invite, which helps ensure responsible use of this powerful technology. The ethical and responsible use of AI technologies becomes ever more important as Deep Learning and Machine Learning models keep gaining capabilities. You can read more about Digital Ethics and Responsible AI in one of our Summer of AI articles.
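To make the few-shot idea more concrete, here is a minimal sketch of how a few-shot prompt could be assembled before it is sent to a GPT-3 style completion model. It does not call the Azure OpenAI Service at all; the `build_few_shot_prompt` helper and the `Input:`/`Output:` layout are hypothetical choices for illustration, not a format the service requires.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble a few-shot prompt: an instruction, worked examples, then the new input.

    The "Input:"/"Output:" labels are an illustrative convention (an assumption),
    not something the Azure OpenAI Service prescribes.
    """
    parts = [instruction.strip(), ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")  # blank line separates the examples
    # The model is expected to continue the text after the final "Output:" label.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Summarize each product review in a few words.",
        [
            ("The headset is light and the display is sharp.", "Light with a sharp display"),
            ("Battery died after an hour and support never replied.", "Short battery, no support"),
        ],
        "Setup took two minutes and the avatars look great.",
    )
    print(prompt)
```

The examples travel with every request in the prompt itself; fine-tuning, by contrast, trains a customized model on the supplied data so the examples no longer need to be repeated in each prompt.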

A variation of GPT-3 called OpenAI Codex is already available in preview through GitHub Copilot, which provides AI-assisted programming for developers via a Visual Studio Code extension. OpenAI Codex is a descendant of GPT-3, trained on natural language and billions of lines of source code from public GitHub repositories and other sources. Copilot acts like an AI pair programmer that understands natural language, such as code comments, and turns it into actual source code. AI-augmented development is a growing field, as we wrote in another Summer of AI article covering the democratization of AI.
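To illustrate the comment-to-code workflow, the snippet below shows the kind of function a developer might end up with after typing only the comment and the function signature. It is a hand-written example of that interaction, not recorded Copilot output; real suggestions vary from run to run and should always be reviewed and tested.

```python
# Return the n-th Fibonacci number (fibonacci(0) == 0) using iteration.
def fibonacci(n: int) -> int:
    # The body below is the kind of code an AI pair programmer might suggest
    # from the comment above; it is illustrative, not actual Copilot output.
    if n < 0:
        raise ValueError("n must be non-negative")
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


print([fibonacci(i) for i in range(10)])  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```

The comment carries the intent and the signature constrains the shape of the answer, which is why descriptive comments tend to matter when working with such tools.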

I recently got early access to GitHub Copilot and was really impressed with its capabilities, like generating algorithmic code and more. See for yourself in this short demo video I recorded:

There’s more!

Of course, Ignite had much more to offer, including updates to the Industry Cloud for Financial Services, the previews of Microsoft Cloud for Manufacturing, Healthcare and Sustainability, and Microsoft Cloud for Nonprofit, which we are also strongly interested in as part of our Valorem for Nonprofit and Tech for Social Impact solution area.

The best way to get more details and read about all the other exciting news from Ignite is the official Ignite Book of News. Also, make sure to watch the recap videos and Ignite impressions from my colleagues here.

Where to get started?

Valorem and the global Reply network are here to help with our experienced teams of immersive, AI and other experts who can meet you wherever you are on your journey. If you have a project in mind or would like to talk about your path to success in the Metaverse or beyond, we would love to hear about it! Reach out to us at marketing@valorem.com to schedule time with one of our experts.